Meta AI enhances language support and introduces creative selfies with Imagine Yourself

Meta AI adds support for more languages and new creative tools such as Imagine Yourself for personalized selfies, and it is set to arrive on Meta Quest VR headsets.

Meta AI, Meta’s AI-powered assistant, has been updated. It now supports additional languages and can generate stylized selfies. Users can also direct questions to Llama 3.1 405B, Meta’s newest flagship AI model, which can handle more complex queries than its predecessor.

Meta says Llama 3.1 405B is particularly strong at math and coding questions. That suggests it is suited to tasks such as math homework, explaining scientific concepts, and debugging code.

Access to Llama 3.1 405B is limited. Users must manually switch to the model, and the number of queries is capped each week. Once a user exceeds that cap, Meta AI switches to the smaller Llama 3.1 70B model.

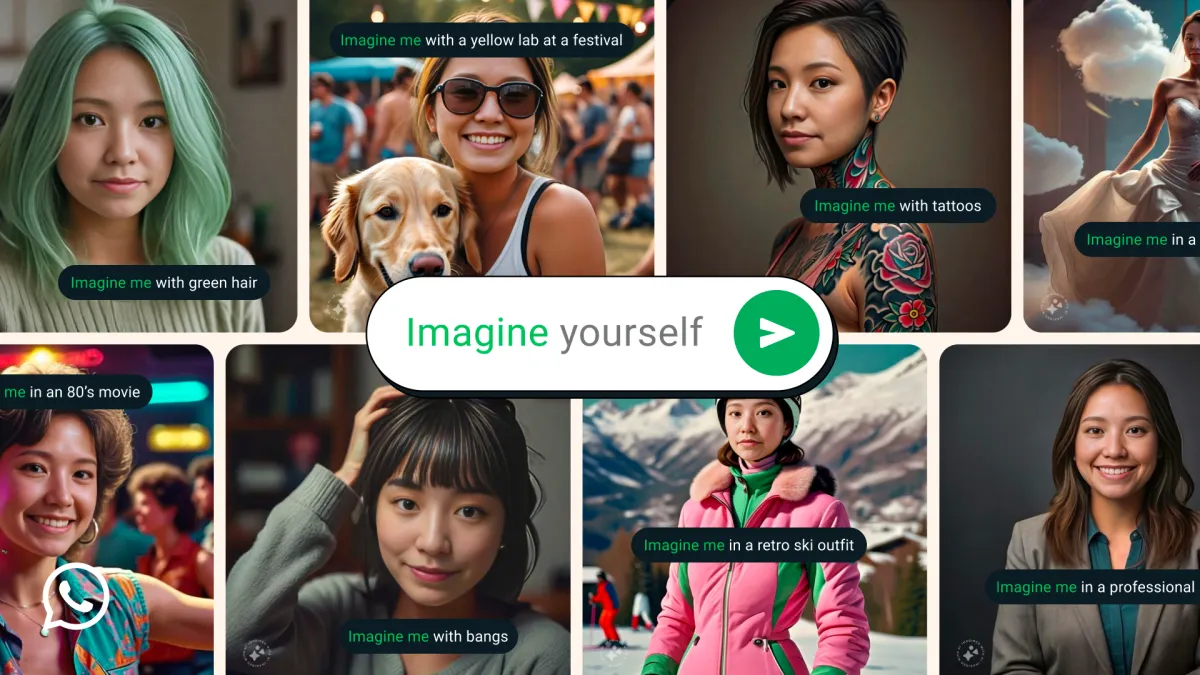

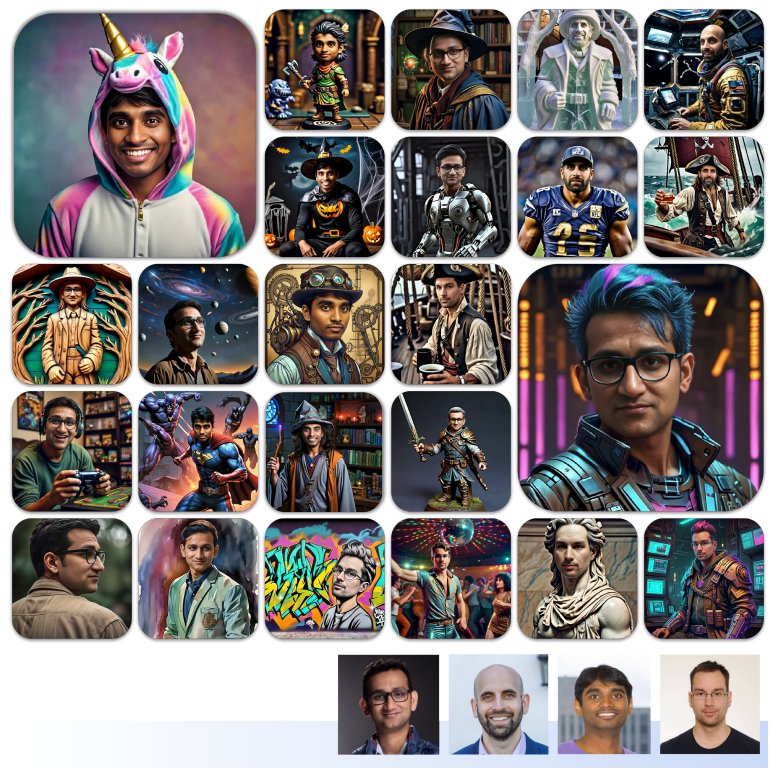

In addition to Llama 3.1 405B, Meta AI uses a separate generative AI model for the selfie feature. Called Imagine Yourself, this model generates images from a photo the user provides and a text prompt. The feature is currently in beta and is triggered in Meta AI by typing "Imagine me" followed by a prompt that is not NSFW.

Meta has not disclosed what data was used to train Imagine Yourself. However, Meta's terms of use indicate that public posts and images from its platforms may be included. This policy, together with the opt-out process, has drawn complaints from users.

Alongside Imagine Yourself, Meta AI offers new image editing tools. Users can add, remove, or change objects with text prompts such as "Change the cat to a corgi." An "Edit with AI" button, which provides additional fine-tuning options, will be introduced next month. Meta AI users will also soon get shortcuts for sharing AI-generated images across Meta platforms.

Meta AI will soon replace the Voice Commands feature on the Meta Quest VR headset, starting with an upcoming rollout in the U.S. and Canada in "experimental mode." Users will be able to use Meta AI with passthrough enabled and ask about their physical surroundings. As an example, Meta says a user could hold up a pair of shorts and ask, "Look and tell me what kind of top would complete this outfit."

Meta AI is currently available in 22 countries, with recent additions including Argentina, Chile, Colombia, Ecuador, Mexico, Peru, and Cameroon. The assistant now supports French, German, Hindi, Hindi-Romanized Script, Italian, Portuguese, and Spanish, and Meta says more languages are on the way.