Apple's Ferret-UI: AI for mobile UI understanding

Apple researchers have unveiled Ferret-UI, a new AI model that can comprehend on-screen content on mobile devices. This multimodal large language model (MLLM) is the latest in a series of models under development.

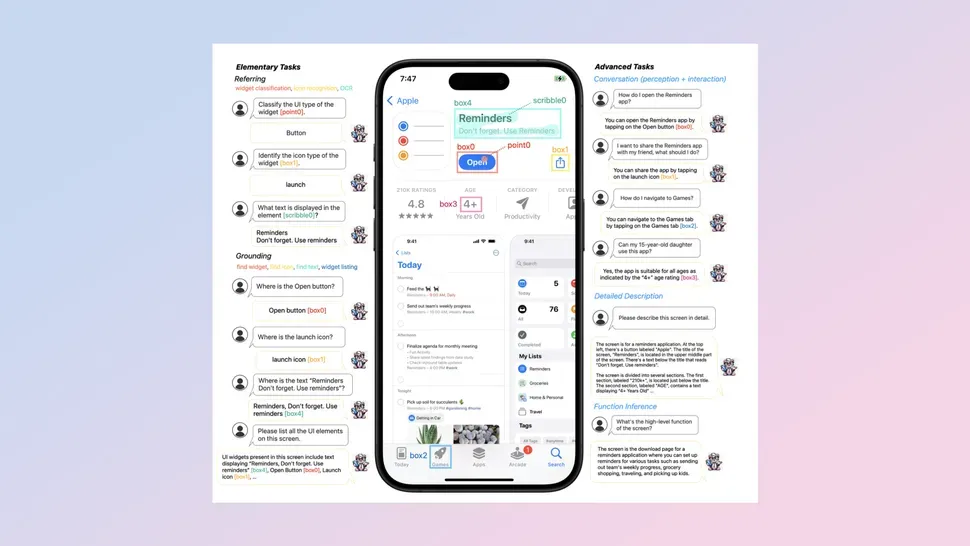

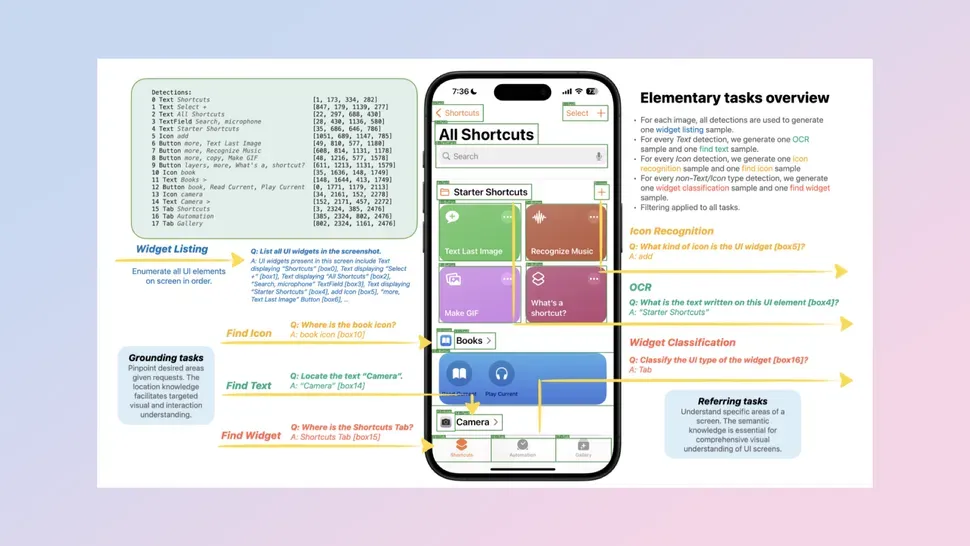

Apple has introduced Ferret-UI, a novel multimodal large language model (MLLM) designed to understand and interact with mobile user interfaces (UIs). A recent research paper details how Ferret-UI analyzes on-screen content. The model can identify icon types, locate specific text elements, and generate precise instructions based on the user's current UI context. These capabilities could give Ferret-UI a significant role in the future of human-computer interaction on mobile devices.

Whether Ferret-UI will be integrated into the rumored Siri 2.0 update or remain a standalone research project is still undetermined.

Advancing phone interaction

Modern smartphones serve as versatile tools for tasks such as looking up information or making reservations. Users navigate UIs by looking at the screen and tapping buttons. Apple envisions a future where this process is streamlined through automation, and Ferret-UI is a step toward that goal.

Ferret-UI, a multimodal large language model (MLLM), is designed to understand and interact with mobile UIs. The model addresses three key challenges: comprehending the entirety of the on-screen content, focusing on specific UI elements, and aligning natural language instructions with the visual context. Beyond user experience improvements, potential applications of Ferret-UI extend to accessibility, app testing, and usability studies.

In a demonstration of its capabilities, Ferret-UI was presented with an image of AirPods displayed within the Apple Store application. The model accurately identified the appropriate action for purchase, indicating that the user should tap the "Buy" button.
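To make the idea of tying an instruction to a specific on-screen element more concrete, here is a minimal Python sketch. It is not Ferret-UI's method or API: the `UIElement` type, the `find_tap_target` function, the labels, and the pixel coordinates are all hypothetical, and the list of elements is written by hand where a real system would derive it from a screenshot.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class UIElement:
    """One on-screen widget. All fields are hypothetical, for illustration only."""
    kind: str                         # e.g. "button", "text", "icon"
    label: str                        # visible text or accessibility label
    bbox: Tuple[int, int, int, int]   # (left, top, right, bottom) in pixels


def find_tap_target(elements: List[UIElement], wanted_label: str) -> Optional[Tuple[int, int]]:
    """Return the centre of the first button whose label matches, else None."""
    for el in elements:
        if el.kind == "button" and el.label.lower() == wanted_label.lower():
            left, top, right, bottom = el.bbox
            return ((left + right) // 2, (top + bottom) // 2)
    return None


# Hand-written stand-in for what a screen-understanding model might extract
# from a product page; the values are made up.
screen = [
    UIElement("text", "AirPods", (40, 120, 300, 160)),
    UIElement("icon", "share", (320, 120, 360, 160)),
    UIElement("button", "Buy", (40, 600, 360, 660)),
]

print(find_tap_target(screen, "buy"))  # centre of the "Buy" button
```

The sketch only covers the final lookup step. The hard part that a model like Ferret-UI is meant to handle, recognizing the elements in the image and interpreting the user's intent, is assumed to have happened already.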

The ubiquity of smartphones has driven research into tailoring AI functionalities for these devices. Meta Reality Labs anticipates a future where users spend over an hour a day either chatting directly with chatbots or relying on LLMs that run in the background to power features like recommendations. Meta's chief AI scientist, Yann LeCun, even suggests a future where AI assistants curate our entire digital experience. While Apple has not disclosed specific plans for Ferret-UI, the model's potential to enhance Siri and streamline the iPhone user experience is evident.