Meta Updates AI Labeling Policy to Expose Generated Content

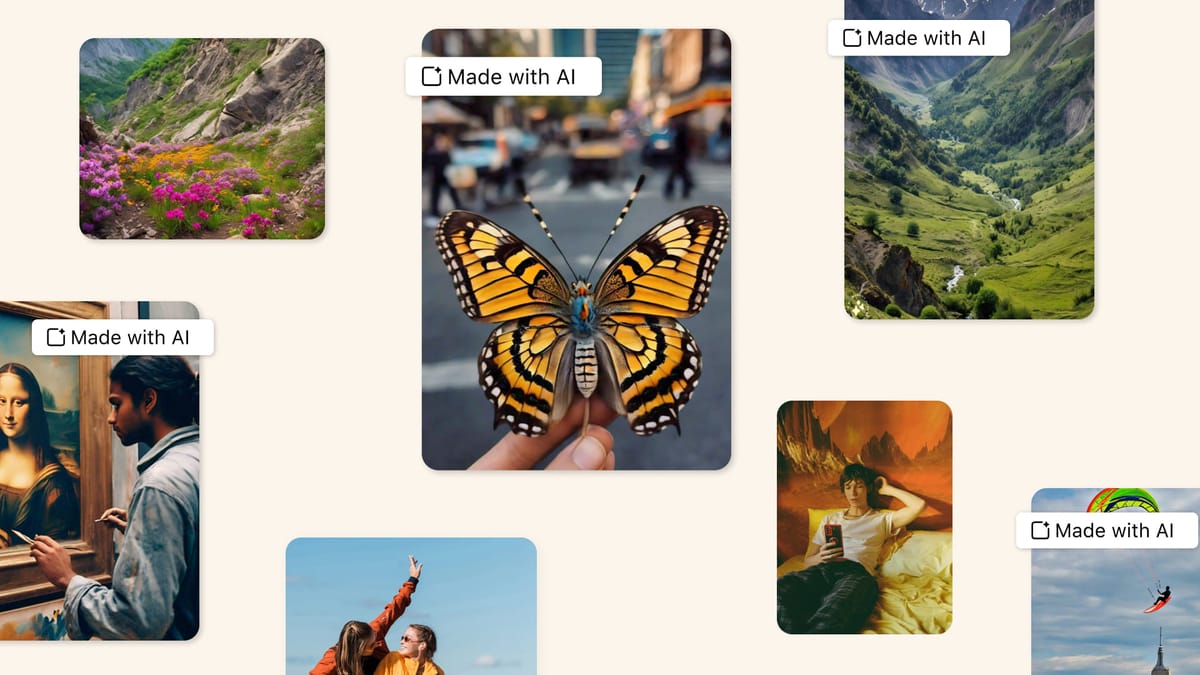

Meta to update AI content labeling on platforms. New approach aims to identify broader range of synthetic content, including images, video and audio, generated by artificial intelligence. Labels will inform users and promote transparency.

Meta Platforms Inc. (Meta) is updating its approach to identifying and disclosing synthetic content on its social media applications, including Facebook. This update comes in response to the increasing prevalence of posts created using generative artificial intelligence (AI).

Previously, Meta's focus was primarily on identifying and removing deepfake videos – videos manipulated using AI to make it appear as if someone is saying or doing something they did not. The revised policy acknowledges the evolving landscape of generative AI and aims to encompass a broader range of AI-generated content, including images and audio.

Under the new policy, content that Meta's detection systems identify as AI-generated, or that users disclose as such, will be labeled accordingly. These labels will use industry-standard indicators to inform users about the content's artificial origin.

This shift in strategy reflects Meta's commitment to providing users with greater context and transparency regarding the content they encounter on its platforms. By leaving most AI-generated content accessible but clearly labeled, Meta aims to empower users to make informed judgments about the information they consume.

However, the effectiveness of this approach hinges on Meta's ability to accurately detect AI-generated content. As AI technology continues to develop, so too will the sophistication of synthetic media. Meta acknowledges this challenge and suggests that it will rely on a combination of automated detection and user disclosure.

The updated policy is scheduled to take effect in May 2024. Its impact on user awareness and on content moderation practices across social media remains to be seen.