OpenAI announced GPT-4 | New model recognizes images and explains memes

GPT-4 can now work not only with text, but also with images. It will be able to analyze drawings and generate code from them, explain memes, and much more.

OpenAI has announced GPT-4, the next generation of its AI language model. Its main feature is that it can now work not only with text, but also with images. For example, GPT-4 will be able to analyze drawings and generate code from them, explain memes, solve problems, explain device instructions in plain language, and much more.

ChatGPT has already been remarkably successful: it writes theses and articles, solves homework, and puts Google's business at risk. But Andreas Braun, the technical director of Microsoft Germany, called the release of this particular model "a game-changer."

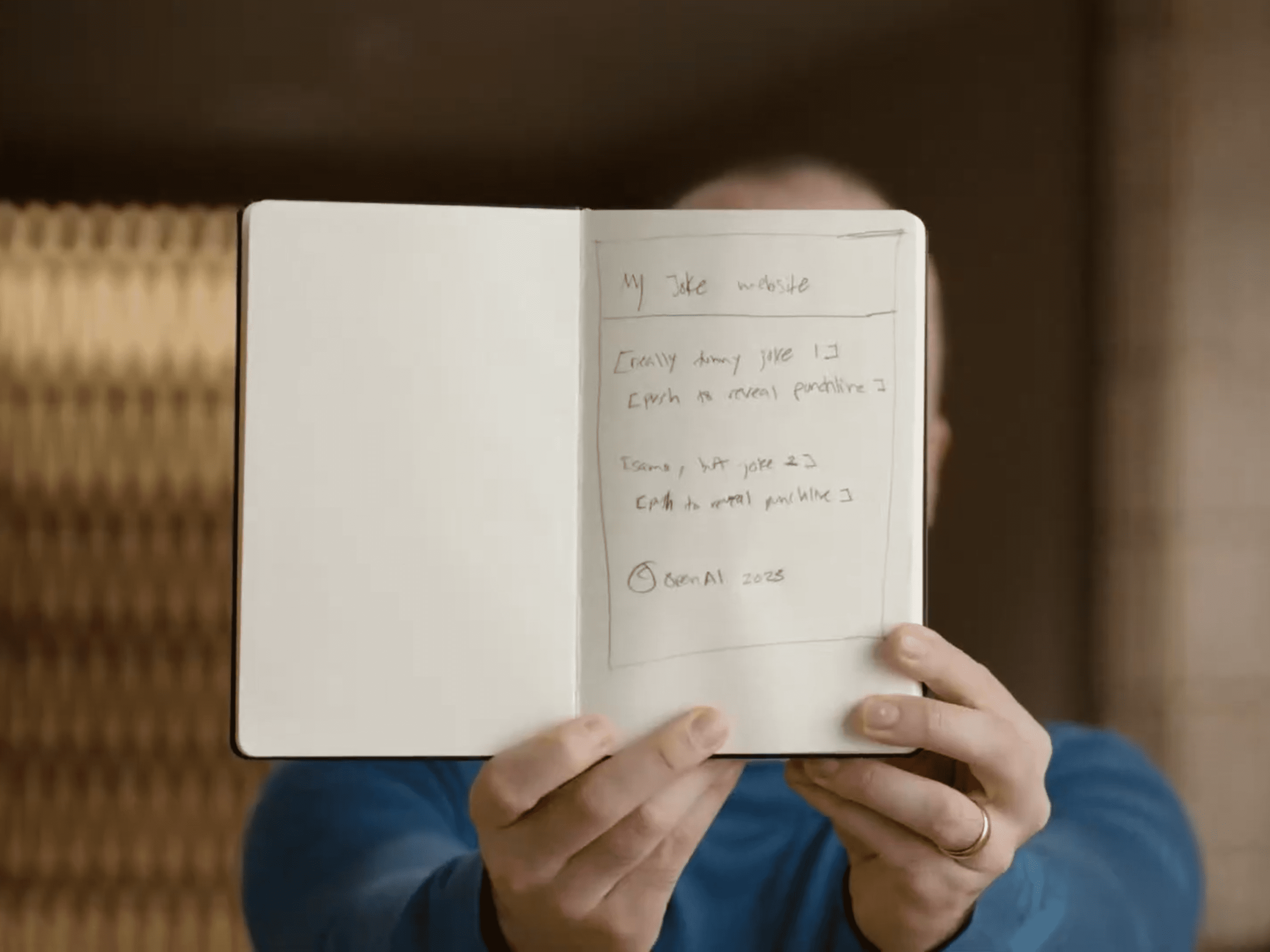

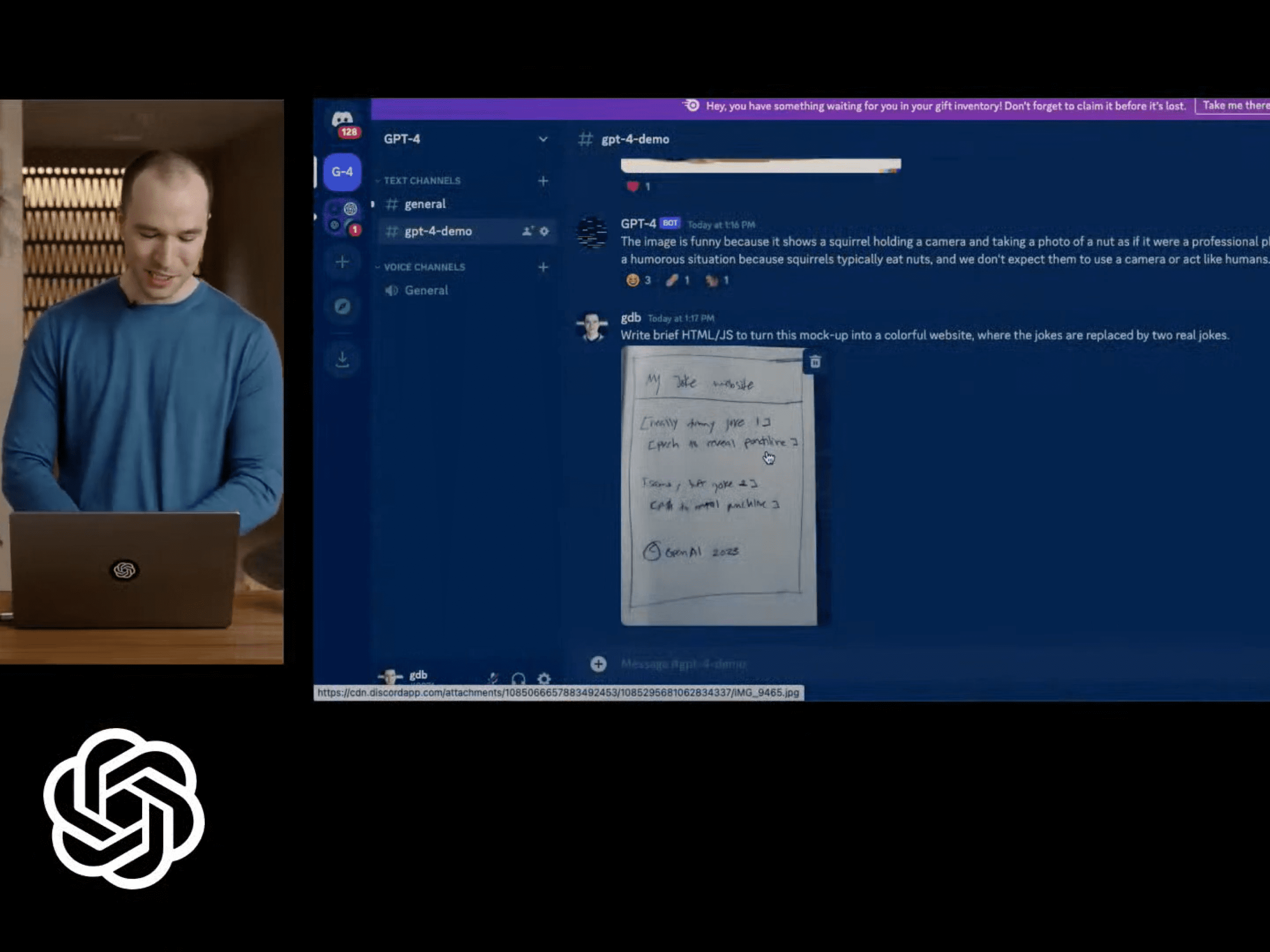

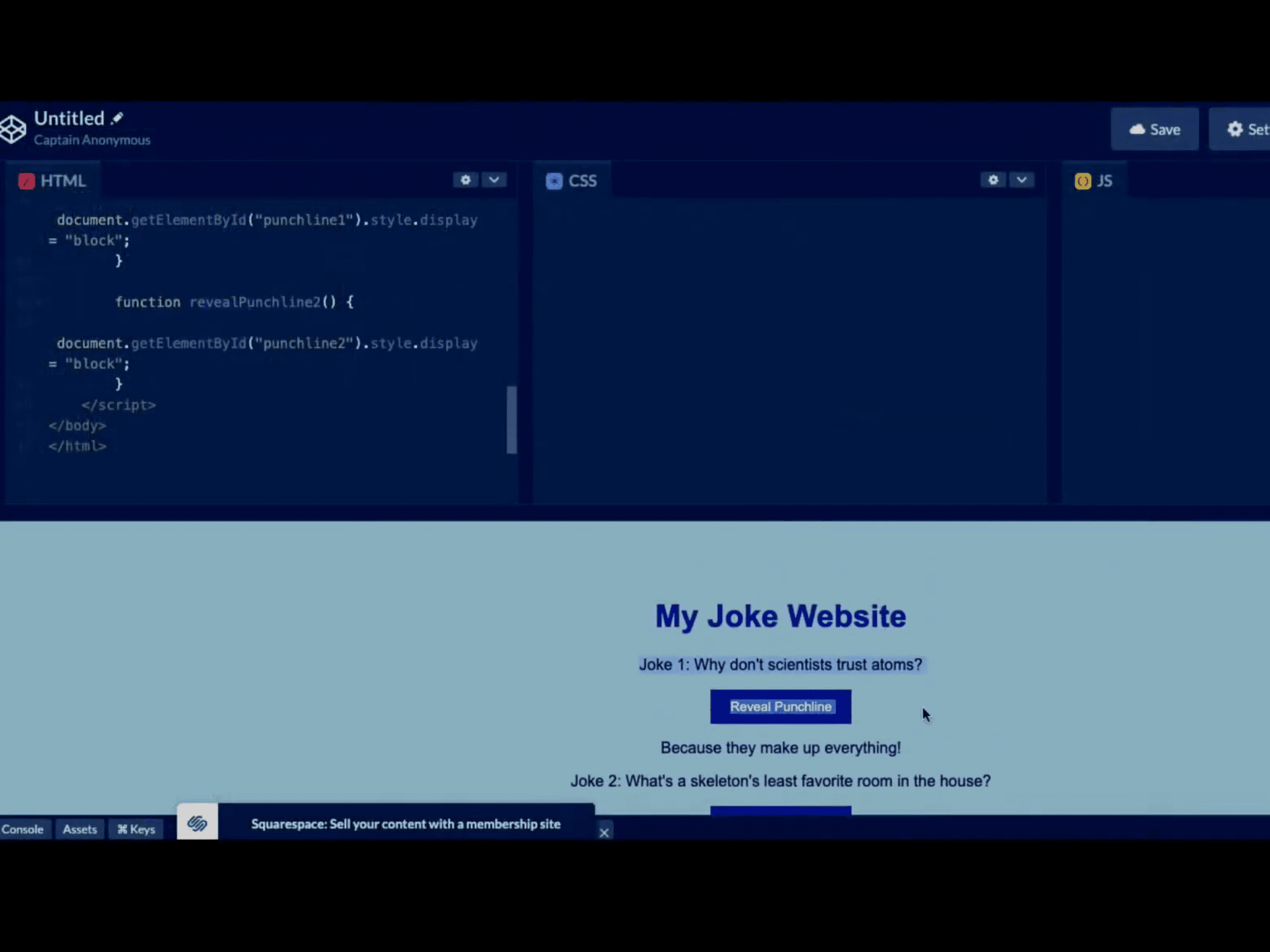

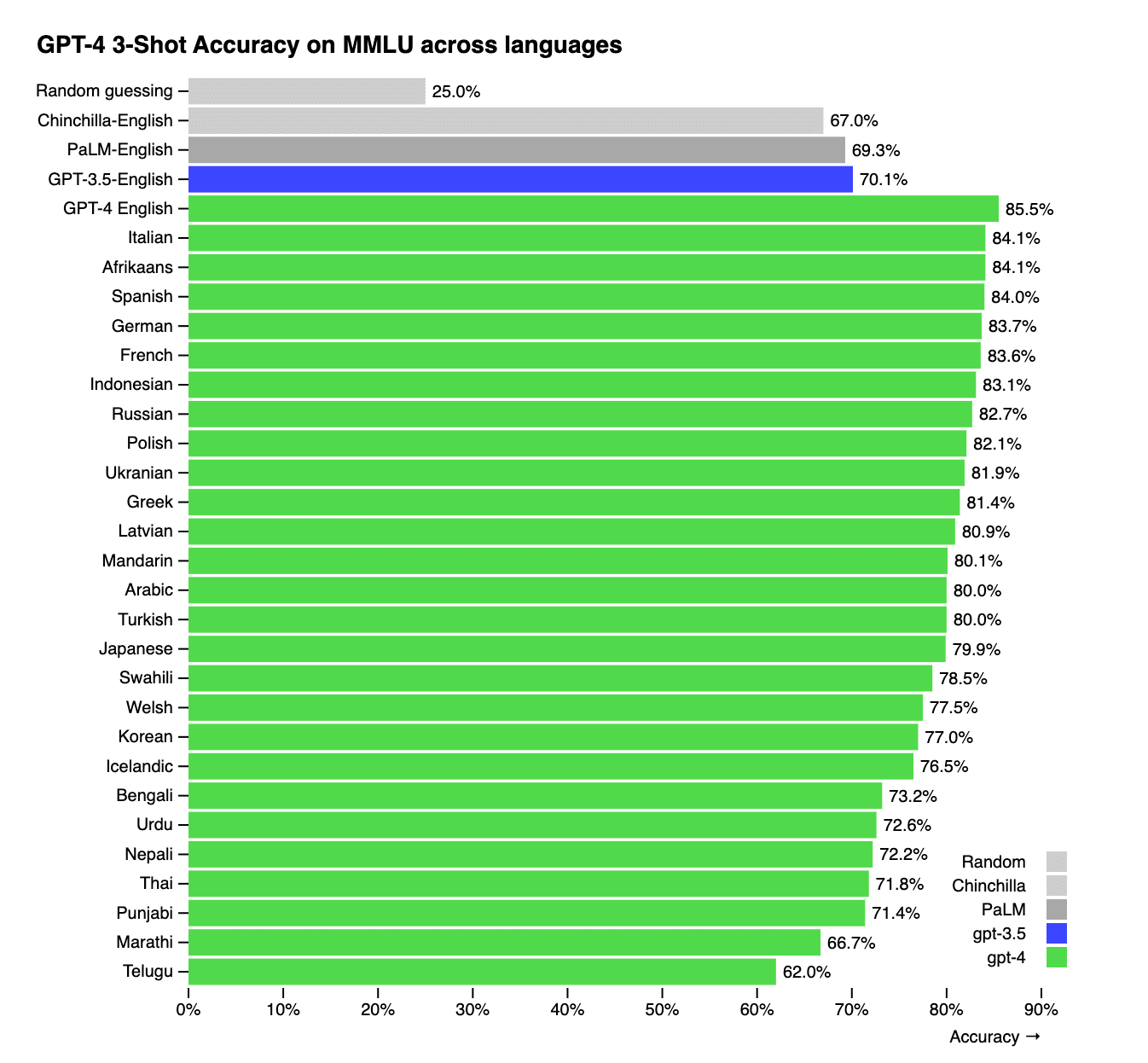

At the GPT-4 presentation, the presenter photographed a simple hand-drawn sketch of a website, and the neural network recognized the image and generated the code for that layout. The new model is "more creative" and "can solve complex problems with greater precision," the company says. GPT-4 also works across languages: for example, a query written in German can return a result in Italian.

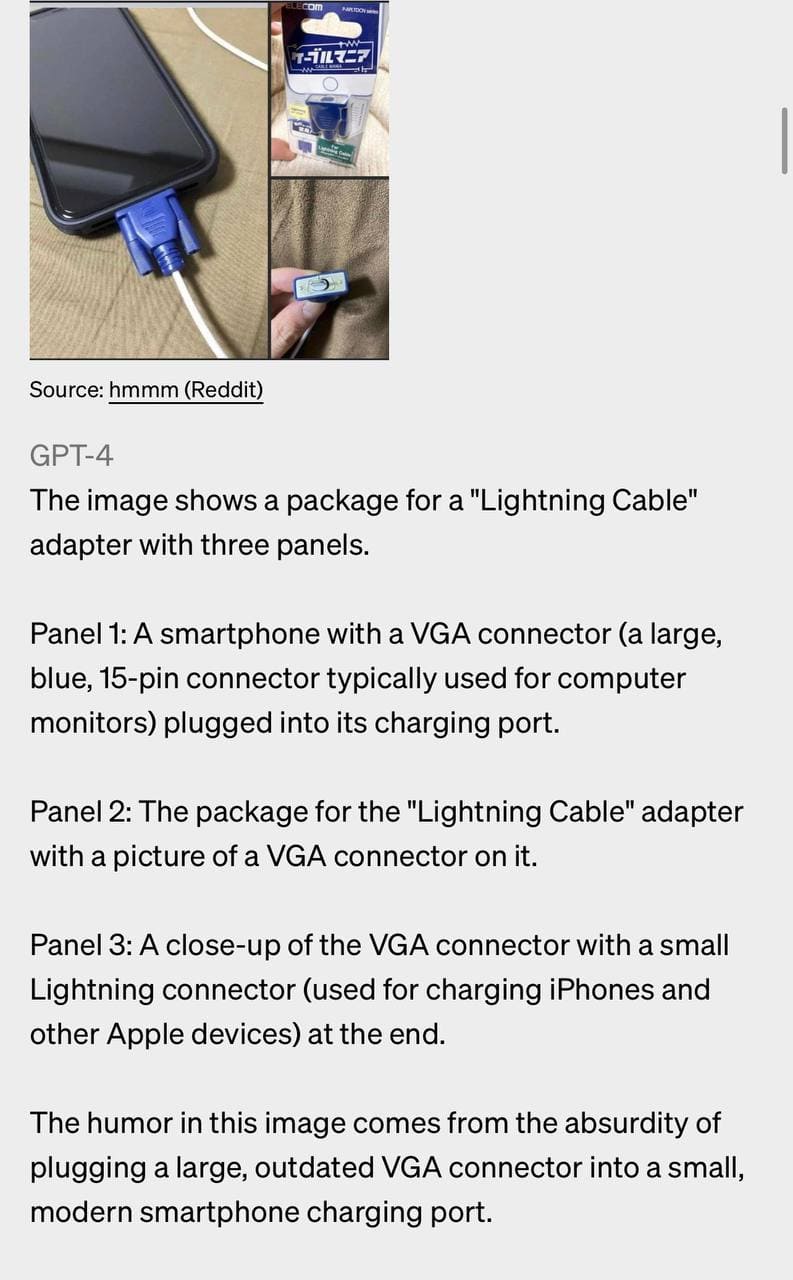

OpenAI showed examples of GPT-4 at work, in which the AI was given various tasks: describe what it sees, solve a problem, identify "what is unusual in the photo," and explain a joke. The model handled all of them well. According to OpenAI, GPT-4 shows human-level performance on a variety of professional and academic benchmarks.

The new model is already being used by Microsoft's Bing search engine and is also available to ChatGPT Plus subscribers. Everyone else will have to sign up for a waiting list to try it out. It looks like Microsoft and other OpenAI investors are about to take off!
